Support #5959

XSLT 3.0 streaming results in OutOfMemoryError

Description

Hello,

I have a large XML file that I tried to split into smaller files for processing, using an XSLT 3.0 stylesheet with streaming.

Here is my Java Code:

StreamingTransformerFactory streamingTransformerFactory =
    XmlTransformerHelper.createStreamingTransformerFactory();
Templates streamingTemplates =
    XmlTransformerHelper.createTemplate(
        streamingTransformerFactory,
        getClass()
            .getClassLoader()
            .getResourceAsStream(RESOURCE_URL_XSL_SPLIT_BIG_ONIX_STREAM));
okFile = new File(targetDirectory.toString(), "dummy.xml");
streamingTemplates
    .newTransformer()
    .transform(new StreamSource(bookStream), new StreamResult(okFile));

Here is my XSL:

<xsl:stylesheet version="3.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
    exclude-result-prefixes="#all"
    xmlns:s="http://www.book.org/book/3.0/short"
    xmlns:saxon="http://saxon.sf.net/">

  <xsl:mode streamable="yes" on-no-match="shallow-copy" use-accumulators="#all"/>

  <xsl:output indent="yes"/>

  <xsl:accumulator name="header" as="element()?" initial-value="()" streamable="yes">
    <xsl:accumulator-rule match="s:header" phase="end" saxon:capture="yes" select="."/>
  </xsl:accumulator>

  <xsl:template match="s:Entry">
    <xsl:result-document href="{position()}.xml" method="xml">
      <xsl:element name="{name(ancestor::*[last()])}" namespace="{namespace-uri(ancestor::*[last()])}">
        <xsl:copy-of select="accumulator-before('header')"/>
        <xsl:copy-of select="."/>
      </xsl:element>
    </xsl:result-document>
  </xsl:template>

</xsl:stylesheet>

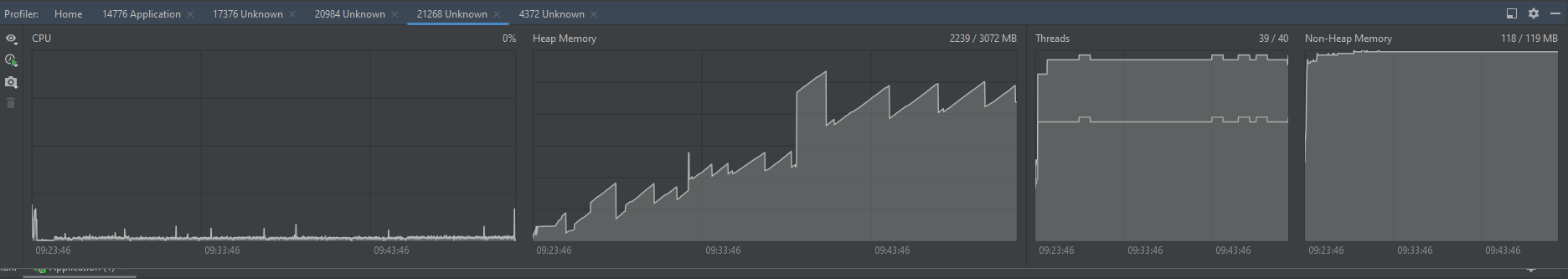

It works fine for smaller XML files of around 200 MB, but for a 2 GB XML file I get an OutOfMemoryError (see the attachment).

I started my Spring Boot application with the VM arguments "-Xms1G -Xmx3G".

I expected that streaming would keep memory consumption low, regardless of the size of the input.

Is that assumption wrong, or did I do something wrong?
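Before looking at the stylesheet, it may be worth confirming that the JVM running the transformation really received the heap settings quoted above, since launchers sometimes fork a process with different arguments. The following is a minimal sketch using only standard JDK calls; the class name HeapSettings is made up for illustration.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

final class HeapSettings {

    /** Maximum heap the JVM will attempt to use, in MiB (reflects -Xmx if it was applied). */
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    /** JVM arguments as actually received by this process, e.g. -Xms1G and -Xmx3G. */
    static List<String> jvmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    public static void main(String[] args) {
        System.out.println("Max heap (MiB): " + maxHeapMiB());
        System.out.println("JVM arguments:  " + jvmArguments());
    }
}
```

Calling these two methods from inside the application, just before the transformation starts, shows whether the 3 GB limit is in effect for that process.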

Files

Updated by Michael Kay over 1 year ago

I can't see any obvious reason for this.

Is it possible for you to look at a heap dump and identify what objects are taking up all the space?

Otherwise, if you can supply us with some kind of repro (it doesn't have to be a full 2 GB file), we'll try investigating it at this end.
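One way to produce such a repro without sharing real data is to generate a synthetic input of arbitrary size. The sketch below is only an illustration: the namespace is taken from the stylesheet in this issue, but the root element name "Books" and the content of each Entry are invented, and the class name ReproGenerator is hypothetical.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

final class ReproGenerator {

    // Namespace taken from the stylesheet in this issue.
    static final String NS = "http://www.book.org/book/3.0/short";

    /** Writes one header followed by entryCount Entry elements, one line at a time. */
    static void write(Writer out, long entryCount) throws IOException {
        out.write("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n");
        // "Books" is an assumed root element name, not taken from the real data.
        out.write("<Books xmlns=\"" + NS + "\">\n");
        out.write("  <header><sender>repro</sender></header>\n");
        for (long i = 1; i <= entryCount; i++) {
            // Invented entry content; only the element name Entry comes from the stylesheet.
            out.write("  <Entry><id>" + i + "</id><title>Title " + i + "</title></Entry>\n");
        }
        out.write("</Books>\n");
    }

    public static void main(String[] args) throws IOException {
        Path target = Paths.get(args.length > 0 ? args[0] : "repro.xml");
        long entries = args.length > 1 ? Long.parseLong(args[1]) : 1_000_000L;
        try (BufferedWriter out = Files.newBufferedWriter(target, StandardCharsets.UTF_8)) {
            write(out, entries);
        }
        System.out.println("Wrote " + Files.size(target) + " bytes to " + target);
    }
}
```

Because the entries are written one by one, the generator itself needs very little memory, so the entry count can be raised until the output reaches the size at which the problem appears.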

Updated by Mark Hansen over 1 year ago

- File S3ChunkedStream.java added

When I created a separate test project with the same transformation logic, I noticed that it worked.

The only difference is that the test project reads the XML from a FileInputStream, whereas the original application reads it from a LazySequenceInputStream.

I have attached the LazySequenceInputStream class that we use.

Do you have any clue or is it out of scope?

Updated by Michael Kay over 1 year ago

I think you need to take a look at the memory behavior of that LazySequenceInputStream. I can't really assess it: it's possible, for example, that getContentLength() or getUserMetaData() reads the whole file into memory.
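A rough way to check this without involving the transformation at all is to read the stream to the end with a small fixed buffer and watch how much the heap grows. The following is a sketch under stated assumptions: the class name StreamMemoryProbe is made up, and used-heap readings are noisy because of garbage collection, so the result is an indication rather than a measurement.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;

final class StreamMemoryProbe {

    /** Outcome of draining a stream: total bytes read and peak heap growth observed. */
    static final class Result {
        final long bytesRead;
        final long peakHeapGrowthBytes;

        Result(long bytesRead, long peakHeapGrowthBytes) {
            this.bytesRead = bytesRead;
            this.peakHeapGrowthBytes = peakHeapGrowthBytes;
        }
    }

    /** Heap currently in use, as reported by the runtime. */
    static long usedHeap() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    /** Reads the stream to the end with a 64 KiB buffer, sampling heap use roughly every 16 MiB. */
    static Result drain(InputStream in) throws IOException {
        byte[] buffer = new byte[64 * 1024];
        long baseline = usedHeap();
        long peak = baseline;
        long total = 0;
        long nextSample = 0;
        int n;
        while ((n = in.read(buffer)) != -1) {
            total += n;
            if (total >= nextSample) {
                peak = Math.max(peak, usedHeap());
                nextSample = total + 16L * 1024 * 1024;
            }
        }
        peak = Math.max(peak, usedHeap());
        return new Result(total, peak - baseline);
    }

    public static void main(String[] args) throws IOException {
        // A FileInputStream serves as the reference case; the stream under suspicion
        // would be passed to drain() in the same way.
        try (InputStream in = new FileInputStream(args[0])) {
            Result r = drain(in);
            System.out.println("Bytes read: " + r.bytesRead);
            System.out.println("Peak heap growth (MiB): " + r.peakHeapGrowthBytes / (1024 * 1024));
        }
    }
}
```

If draining the custom stream makes the heap grow in proportion to the input size while the FileInputStream case stays flat, the memory is being consumed in the stream implementation rather than in the streamed transformation.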

Updated by Michael Kay over 1 year ago

- Status changed from AwaitingInfo to Closed

I'm closing this because we asked for more information and received no response. Please feel free to reopen it, or raise a fresh issue, if you need further help.
